Nov 28

/

Katarzyna Truszkowska

Are We Testing the Right Things? Building a Culture of Trust in the Age of AI

When I used to invigilate Cambridge exams, we had extremely strict policies around digital devices. Smartphones, smartwatches, fitness bands - anything that could store or transmit information - had to be collected the moment exam takers arrived. Students placed their devices in labelled envelopes, signed them in, and didn't get them back until the end of the day. It was cumbersome but clear-cut. If it was digital, it wasn't allowed.

Yet I also remember my earlier years teaching 16+ students, when phone bans were controversial. Some teachers wanted to prohibit phones entirely, but we were told that phones were personal belongings and we had no right to confiscate them. That tension between safeguarding academic integrity and respecting student rights has never entirely gone away.

And now we arrive at something far more complicated: AI glasses.

When the Invisible Becomes Impossible to Police

Smart glasses such as Meta's Ray-Ban models blur every line we used to rely on. Unlike a smartphone, they look like normal eyewear. Unlike a smartwatch, they don't announce themselves with glowing screens or notifications. And unlike earlier gadgets, the boundary between a "personal belonging" and a "potential cheating device" becomes much harder to define.

This raises quite a few questions.

Some students genuinely rely on corrective glasses. They have to wear them, and no policy can realistically deny that. But what happens when students who don't need vision correction start wearing AI-enabled glasses because they want live translation, hands-free assistance, or the convenience of an ever-present digital helper? And what about those who do need prescription glasses but choose the AI-enabled versions?

How do we distinguish who is using glasses for eyesight and who is using them for silent, invisible AI support?

For the first time, the tools that support learning can also silently undermine formal summative assessment.

We can't reasonably ask a student to remove their corrective glasses any more than we can ask them to remove a hearing aid. But AI glasses complicate that moral clarity. The line between necessity and advantage becomes blurred. The line between assistive technology and cheating begins to fade.

All of this makes it harder to build and maintain a culture of trust in schools and universities.

Are We Measuring the Right Things?

But perhaps AI is forcing us to confront a question we should have been asking all along.

Recent research by Newton et al. (2025) shows that ChatGPT-4o can score 94% on UK medical licensing exams and 89.9% on US medical boards, even on novel questions it's never seen.

If AI can pass our most rigorous professional examinations, what exactly are those exams measuring? If AI can demonstrate "knowledge" effectively, are we testing genuine understanding or just information retrieval?

So we have two choices. Either we build ever-higher walls around assessment, or we fundamentally rethink what we're assessing and why.

But let's be clear. Building a culture of trust is not easy. And it's particularly difficult when we need to assess foundational skills, the ones at the bottom of Bloom's Taxonomy, like remembering and understanding. These are hard to test using higher-order thinking methods, yet they're essential. You can't apply, analyse, or create without them.

This is where we need both secure assessment and a developmental framework for AI integration.

Framework for AI Integration

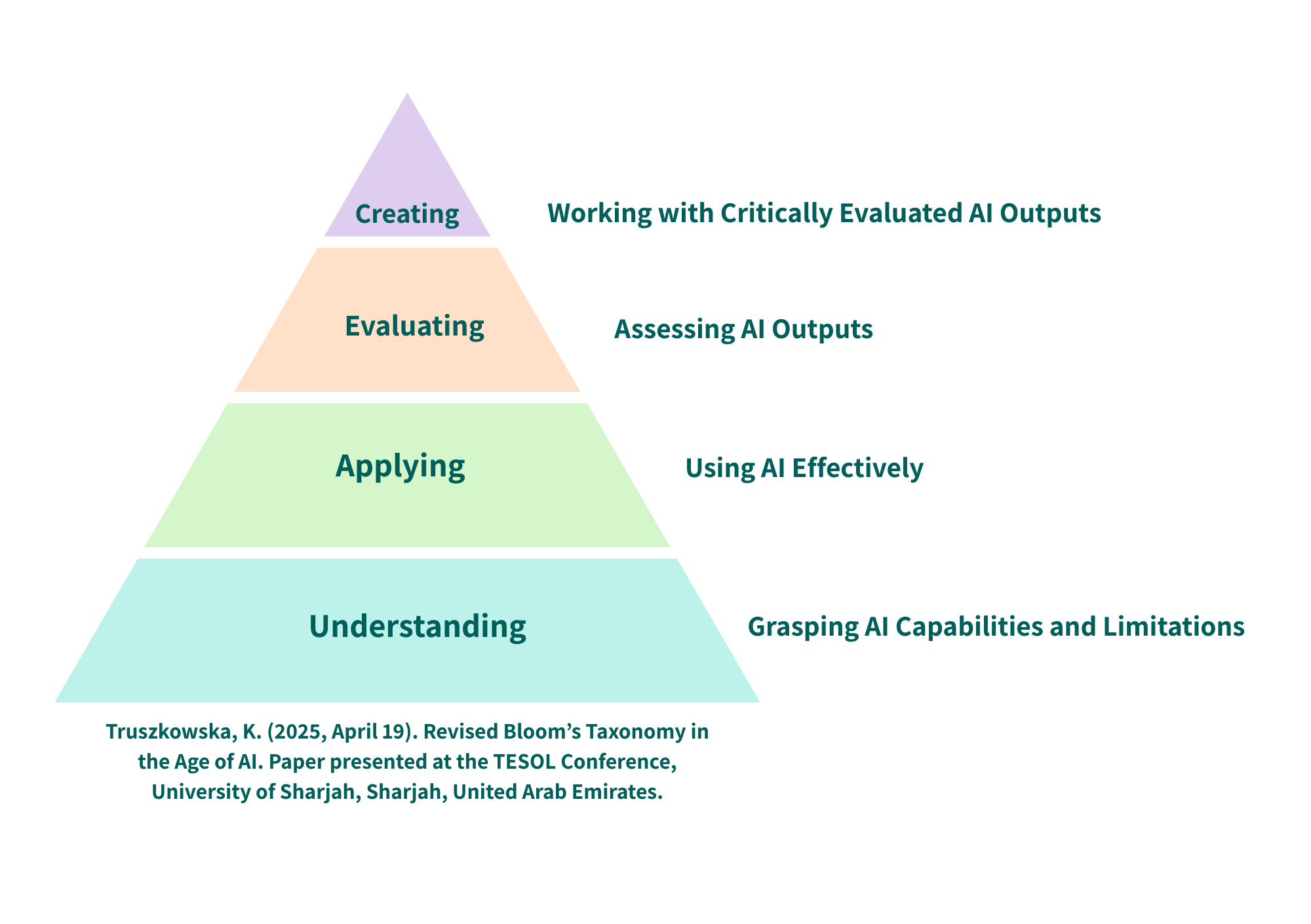

Rather than treating AI as simply a threat to be contained, we need a developmental approach that builds both competence and integrity. I've developed a four-stage framework based on Bloom's Taxonomy that addresses this challenge.

Stage 1: Understanding. Students and staff develop AI literacy and recognize the limitations of tools like ChatGPT, which can generate convincing but inaccurate content (Quelle & Bovet, 2024). At this foundational stage, assessment needs secure environments. We must verify that students actually understand before they move forward.

Stage 2: Applying. Clear policies guide appropriate AI use. Here, the AIAS framework (Perkins et al., 2024) becomes invaluable, as students need explicit guidance about what's permitted.

Stage 3: Evaluating. Students critically assess AI outputs for accuracy and reliability, reflecting emerging shifts in critical thinking (Lee et al., 2025). This is where AI becomes a learning tool, as students learn to question, verify, and evaluate AI-generated content rather than accepting it blindly.

Stage 4: Creating. AI supports idea generation, but final work must rely on original analysis, primary data, or independent synthesis to maintain academic integrity. Assessment at this level can embrace AI integration while still measuring genuine student capability.

This framework acknowledges what Newton's research makes clear. We cannot skip the foundational stages. But it also shows how AI can be progressively integrated as students demonstrate competence at each level.

The Path Forward

Building a culture of trust in the age of AI is not easy. It requires us to:

1. Acknowledge the challenge honestly - We cannot assess foundational knowledge (remembering, understanding) using only higher-order thinking methods. Some things need secure testing environments.

2. Be developmentally appropriate - Different stages of learning require different approaches to AI integration and assessment.

3. Maintain clarity - Students need to understand why some assessments are AI-free while others may integrate AI thoughtfully.

4. Build genuine partnership - Trust isn't demanded. It's earned through transparency, student participation in policy creation, and clear rationales for different approaches.

The strictness of those Cambridge exam protocols made sense, and for foundational knowledge assessment, secure environments still make sense today. But as students progress through the framework, moving from understanding to creating, our approach can evolve with them.

The real question isn't "How do we stop students from cheating with AI?" It's "How do we guide students through a developmental journey where AI literacy, critical thinking, and academic integrity grow together?"

The answer might reshape not just how we prevent cheating, but how we structure learning itself, building competence and character in parallel, from foundation to creation.

References

Deci, E. L., & Ryan, R. M. (2000). The "what" and "why" of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry, 11(4), 227-268. https://doi.org/10.1207/S15327965PLI1104_01

McCabe, D. L., & Treviño, L. K. (1993). Academic dishonesty: Honor codes and other contextual influences. The Journal of Higher Education, 64(5), 522-538.

McCabe, D. L., Treviño, L. K., & Butterfield, K. D. (1999). Academic integrity in honor code and non-honor code environments. The Journal of Higher Education, 70(2), 211-234.

McCabe, D. L., Treviño, L. K., & Butterfield, K. D. (2001). Honor codes and other contextual influences on academic integrity. Research in Higher Education, 42(4), 357-378.

Newton, P. M., Summers, C. J., Zaheer, U., et al. (2025). Can ChatGPT-4o really pass medical science exams? A pragmatic analysis using novel questions. Advances in Health Sciences Education, 30(1), 151-165.

Perkins, M., Furze, L., Roe, J., & MacVaugh, J. (2024). The Artificial Intelligence Assessment Scale (AIAS): A framework for ethical integration of generative AI in educational assessment. Journal of University Teaching and Learning Practice, 21(6).

Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55(1), 68-78. https://doi.org/10.1037/0003-066X.55.1.68

Tatum, H. E. (2022). Honor codes and academic integrity: Three decades of research. Journal of College and Character, 23(1), 32-47.

Truszkowska, K. (2025, April 19). Revised Bloom’s Taxonomy in the Age of AI. Paper presented at the TESOL Conference, University of Sharjah, Sharjah, United Arab Emirates.
